Project repository:

https://github.com/vastsa/FileCodeBox

Prerequisites:

- A Cloudflare account

- A Hugging Face account

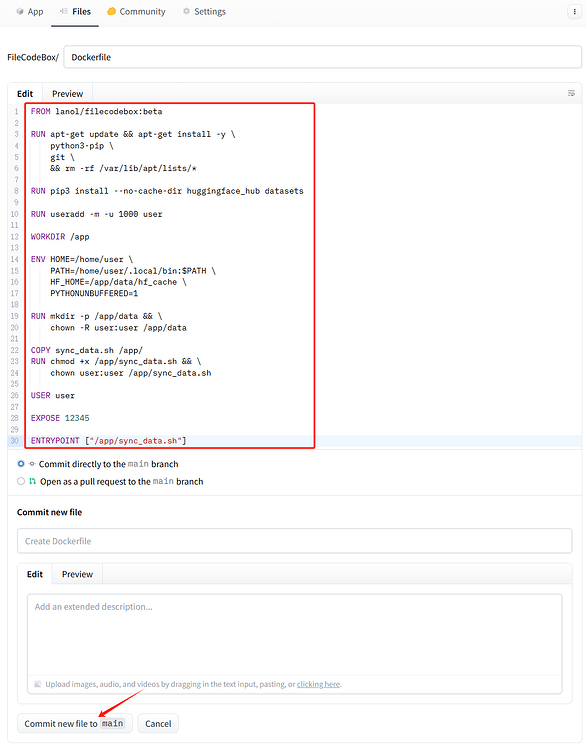

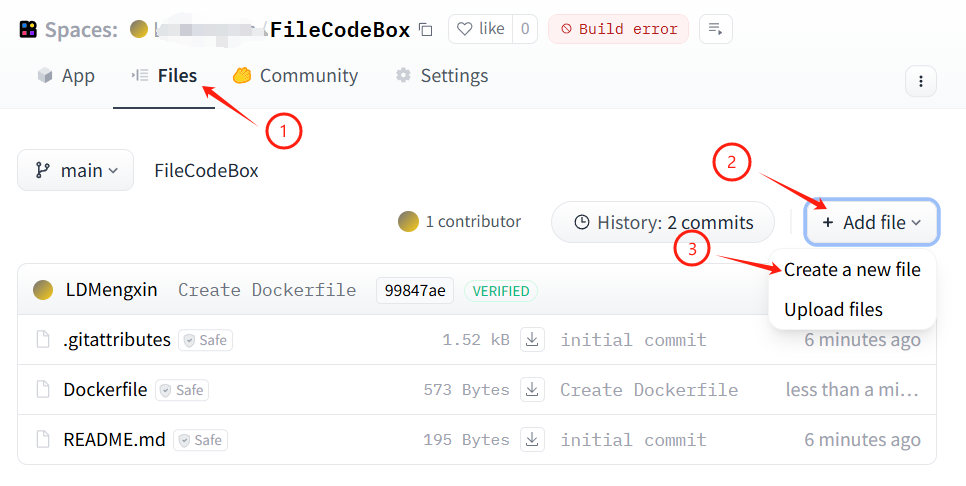

Create the Dockerfile

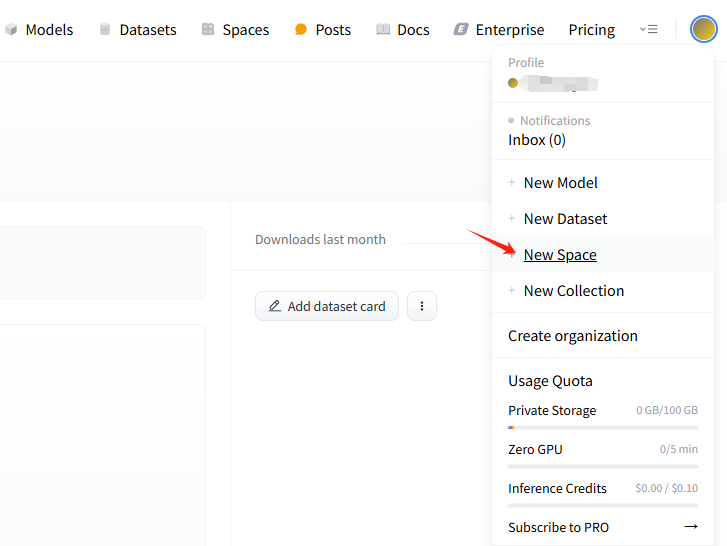

After registering on Hugging Face, click your avatar and create a new Space:

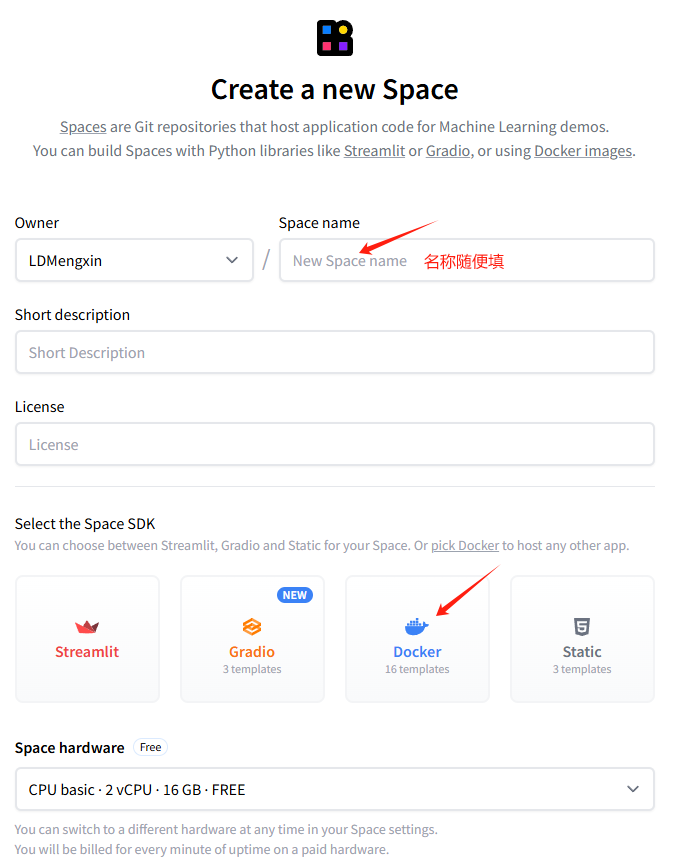

Choose Docker as the deployment option:

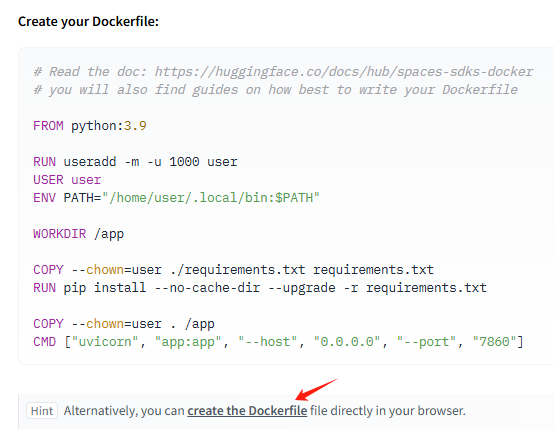

Then scroll to the bottom and click the button in the lower-left corner.

Enter the following content, then click main:

```dockerfile
FROM lanol/filecodebox:beta

RUN apt-get update && apt-get install -y \
    python3-pip \
    git \
    && rm -rf /var/lib/apt/lists/*

RUN pip3 install --no-cache-dir huggingface_hub datasets

RUN useradd -m -u 1000 user

WORKDIR /app

ENV HOME=/home/user \
    PATH=/home/user/.local/bin:$PATH \
    HF_HOME=/app/data/hf_cache \
    PYTHONUNBUFFERED=1

RUN mkdir -p /app/data && \
    chown -R user:user /app/data

COPY sync_data.sh /app/
RUN chmod +x /app/sync_data.sh && \
    chown user:user /app/sync_data.sh

USER user

EXPOSE 12345

ENTRYPOINT ["/app/sync_data.sh"]
```

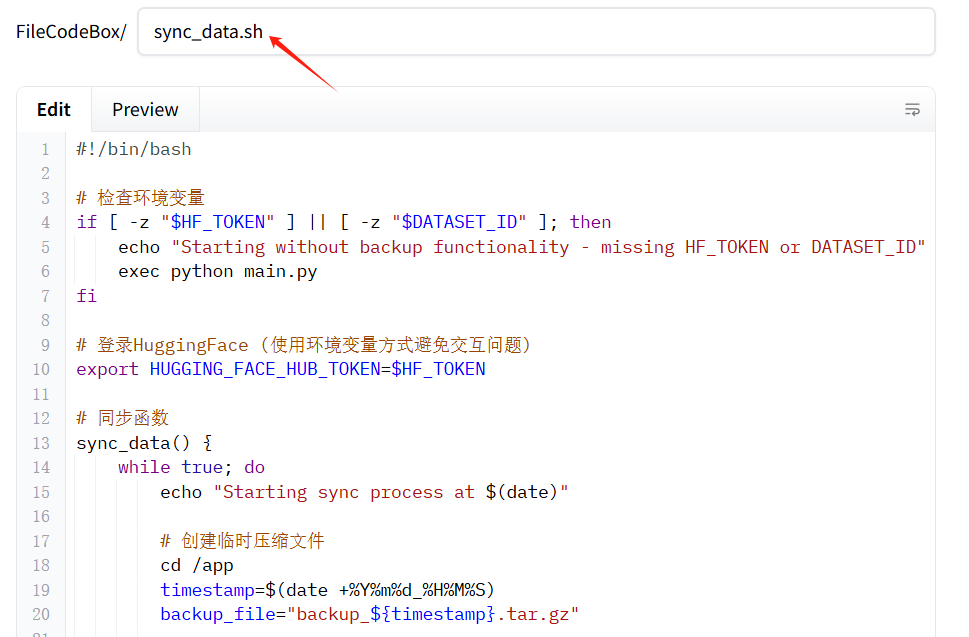

Create the sync_data.sh file

```bash
#!/bin/bash

# Check the environment variables; without them, run the app with no backups
if [ -z "$HF_TOKEN" ] || [ -z "$DATASET_ID" ]; then
    echo "Starting without backup functionality - missing HF_TOKEN or DATASET_ID"
    exec python main.py
fi

# Authenticate with Hugging Face through an environment variable
# (avoids the interactive login prompt)
export HUGGING_FACE_HUB_TOKEN="$HF_TOKEN"

# Sync function: periodically archive /app/data and upload it to the dataset
sync_data() {
    while true; do
        echo "Starting sync process at $(date)"

        # Create a temporary archive
        cd /app
        timestamp=$(date +%Y%m%d_%H%M%S)
        backup_file="backup_${timestamp}.tar.gz"

        tar -czf "/tmp/${backup_file}" data/

        python3 -c "
from huggingface_hub import HfApi
import os
def manage_backups(api, repo_id, max_files=50):
    files = api.list_repo_files(repo_id=repo_id, repo_type='dataset')
    backup_files = [f for f in files if f.startswith('backup_') and f.endswith('.tar.gz')]
    backup_files.sort()

    if len(backup_files) >= max_files:
        files_to_delete = backup_files[:(len(backup_files) - max_files + 1)]
        for file_to_delete in files_to_delete:
            try:
                api.delete_file(path_in_repo=file_to_delete, repo_id=repo_id, repo_type='dataset')
                print(f'Deleted old backup: {file_to_delete}')
            except Exception as e:
                print(f'Error deleting {file_to_delete}: {str(e)}')
try:
    api = HfApi()
    api.upload_file(
        path_or_fileobj='/tmp/${backup_file}',
        path_in_repo='${backup_file}',
        repo_id='${DATASET_ID}',
        repo_type='dataset'
    )
    print('Backup uploaded successfully')

    manage_backups(api, '${DATASET_ID}')
except Exception as e:
    print(f'Backup failed: {str(e)}')
"
        # Clean up the temporary archive
        rm -f "/tmp/${backup_file}"

        # Sync interval in seconds (defaults to 7200 when SYNC_INTERVAL is unset)
        SYNC_INTERVAL=${SYNC_INTERVAL:-7200}
        echo "Next sync in ${SYNC_INTERVAL} seconds..."
        sleep "$SYNC_INTERVAL"
    done
}

# Restore function: download and unpack the newest backup, if any
restore_latest() {
    echo "Attempting to restore latest backup..."
    python3 -c "
try:
    from huggingface_hub import HfApi
    import os

    api = HfApi()
    files = api.list_repo_files('${DATASET_ID}', repo_type='dataset')
    backup_files = [f for f in files if f.startswith('backup_') and f.endswith('.tar.gz')]

    if backup_files:
        latest = sorted(backup_files)[-1]
        api.hf_hub_download(
            repo_id='${DATASET_ID}',
            filename=latest,
            repo_type='dataset',
            local_dir='/tmp'
        )
        os.system(f'tar -xzf /tmp/{latest} -C /app')
        os.remove(f'/tmp/{latest}')
        print(f'Restored from {latest}')
    else:
        print('No backup found')
except Exception as e:
    print(f'Restore failed: {str(e)}')
"
}

# Main program
(
    # Try to restore the latest backup
    restore_latest

    # Start the sync loop in the background
    sync_data &

    # Start the main application
    exec python main.py
) 2>&1 | tee -a /app/data/backup.log
```
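The rotation and restore logic in the script depends on the `backup_YYYYmmdd_HHMMSS.tar.gz` naming: because the timestamp is zero-padded, plain string sorting is also chronological. Below is a minimal standalone sketch of that selection logic with no Hugging Face calls. The helper names (`backup_name`, `backups_to_delete`, `latest_backup`) are hypothetical and exist only for illustration; the slicing rule is copied from `manage_backups`.

```python
from datetime import datetime


def backup_name(moment: datetime) -> str:
    """Build a name in the same format as the script's $(date +%Y%m%d_%H%M%S)."""
    return f"backup_{moment:%Y%m%d_%H%M%S}.tar.gz"


def _backups(files):
    """Keep only backup archives, sorted oldest first (string order == time order)."""
    return sorted(f for f in files if f.startswith("backup_") and f.endswith(".tar.gz"))


def backups_to_delete(files, max_files=50):
    """Return the oldest archives that the script's rotation step would remove."""
    backups = _backups(files)
    if len(backups) >= max_files:
        # Same slice as manage_backups: leaves max_files - 1 archives in place.
        return backups[: len(backups) - max_files + 1]
    return []


def latest_backup(files):
    """Return the newest archive name, or None when the dataset has no backups."""
    backups = _backups(files)
    return backups[-1] if backups else None
```

Note that the rule removes one more archive than strictly needed to stay at `max_files`: since rotation runs right after an upload, the dataset settles at `max_files - 1` archives.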

Then scroll to the bottom and click main in the lower-left corner.

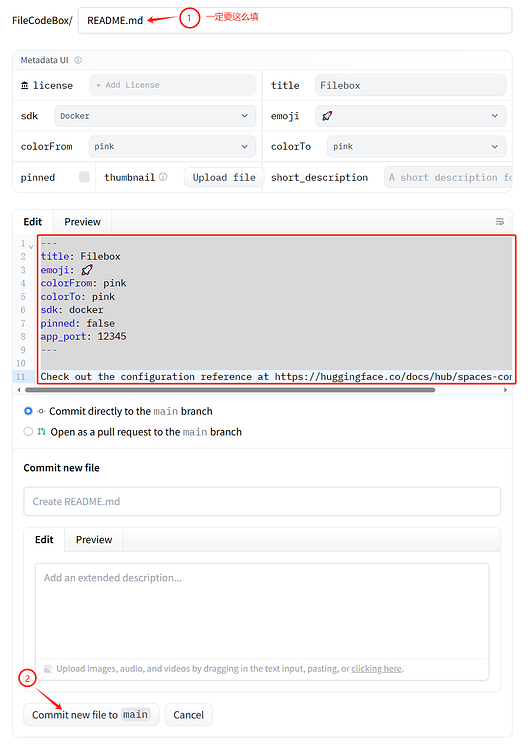

Edit README.md

In the same way as the files above, create a README.md file and fill in:

```yaml
---
title: Filebox
emoji: 🚀
colorFrom: pink
colorTo: pink
sdk: docker
pinned: false
app_port: 12345
---

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference
```
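The `app_port` value in the README front matter and the `EXPOSE` line in the Dockerfile both say 12345, and the two are easy to let drift apart when editing. The following is a rough sketch of a consistency check using only the standard library. It assumes the front matter contains only simple `key: value` lines (it is not a full YAML parser) and that the port the app listens on is the one declared with `EXPOSE`. The function names are hypothetical.

```python
import re


def front_matter(readme_text):
    """Parse the simple `key: value` lines between the two `---` markers."""
    lines = readme_text.strip().splitlines()
    if not lines or lines[0].strip() != "---":
        return {}
    fields = {}
    for line in lines[1:]:
        if line.strip() == "---":
            break  # end of the front matter block
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def exposed_ports(dockerfile_text):
    """Collect the port numbers from `EXPOSE <port>` lines."""
    return [int(p) for p in re.findall(r"^EXPOSE\s+(\d+)", dockerfile_text, flags=re.MULTILINE)]


def ports_match(readme_text, dockerfile_text):
    """True when app_port in the README is one of the Dockerfile's EXPOSE ports."""
    port = front_matter(readme_text).get("app_port", "")
    return port.isdigit() and int(port) in exposed_ports(dockerfile_text)
```

Running `ports_match` on the contents of the two files before committing is one way to catch a mismatched port early.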

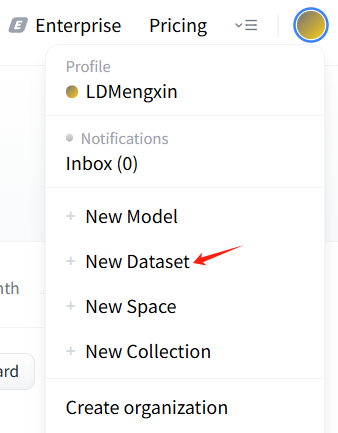

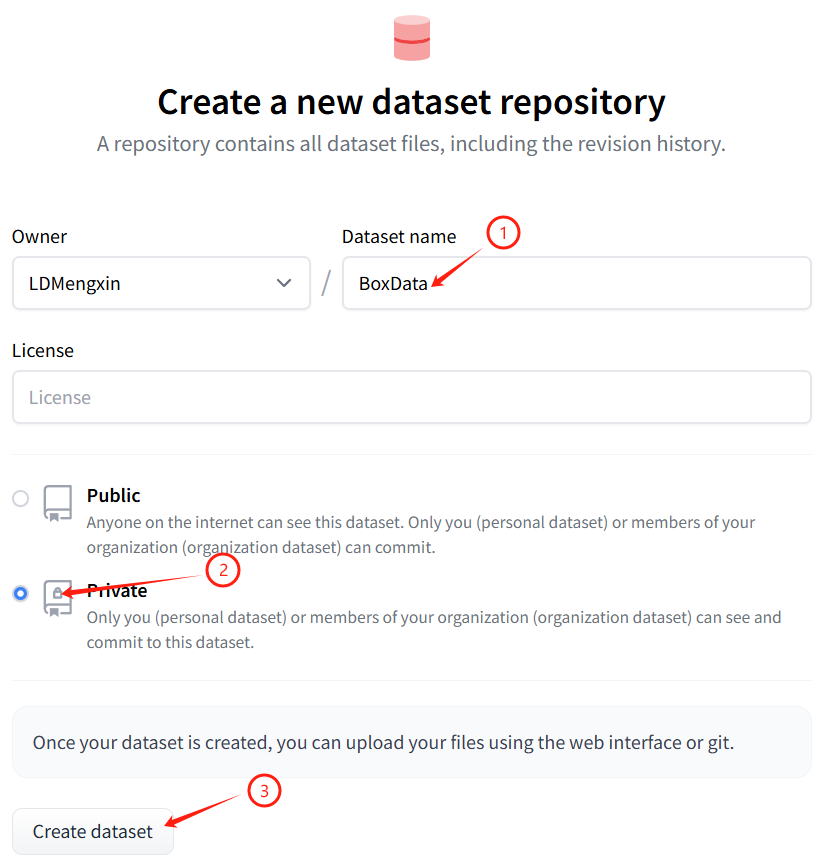

Create the dataset repository

Note your dataset ID, in the format: Hugging Face username/dataset name

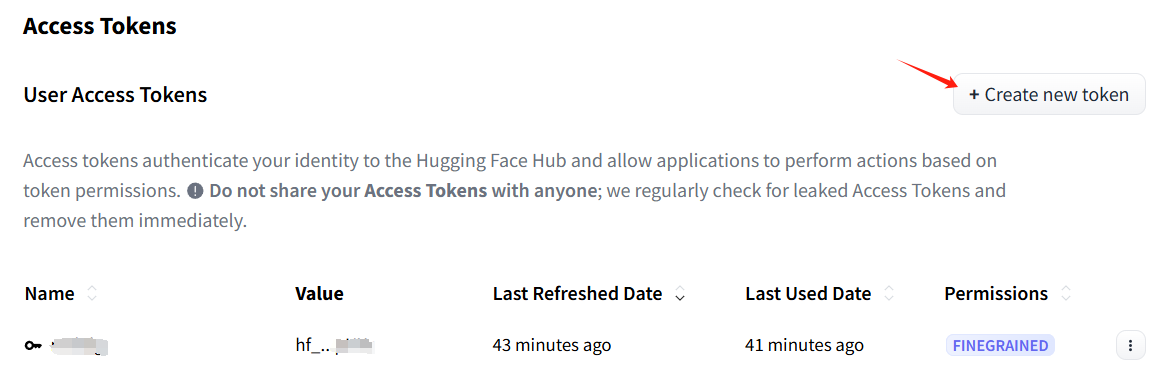

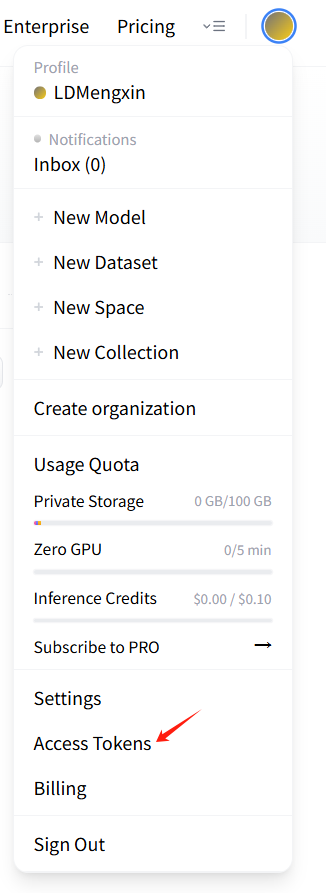

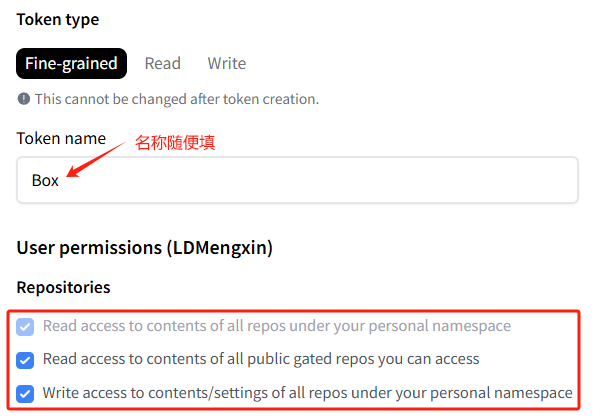

Create an HF token

Scroll to the bottom and click

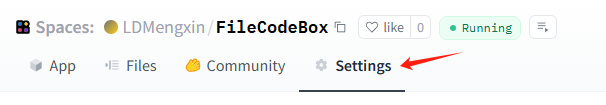

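Add environment variables in the Space settings

Look up your dataset and enter its ID as DATASET_ID.

HF_TOKEN -> your token

DATASET_ID -> username/dataset name

SYNC_INTERVAL -> sync interval in seconds (3600 recommended; sync_data.sh falls back to 7200 when it is unset)

Click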
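A mistyped variable is an easy way to end up with a Space that runs but never backs up, because the script silently starts without backups when `HF_TOKEN` or `DATASET_ID` is empty. The sketch below checks the three values before you paste them in. The function name `check_settings` and the sample values in the usage note are hypothetical; the 7200 default mirrors the fallback in sync_data.sh.

```python
import re

# DATASET_ID is expected as "username/dataset-name": exactly one slash, no spaces.
DATASET_ID_PATTERN = re.compile(r"^[^/\s]+/[^/\s]+$")


def check_settings(env):
    """Return a list of human-readable problems; an empty list means the values look fine."""
    problems = []
    if not env.get("HF_TOKEN"):
        problems.append("HF_TOKEN is empty; the script would start without backups")
    if not DATASET_ID_PATTERN.match(env.get("DATASET_ID", "")):
        problems.append("DATASET_ID should look like username/dataset-name")
    interval = env.get("SYNC_INTERVAL", "7200")  # same default as sync_data.sh
    if not re.fullmatch(r"[0-9]+", interval) or int(interval) <= 0:
        problems.append("SYNC_INTERVAL should be a positive whole number of seconds")
    return problems
```

For example, `check_settings({"HF_TOKEN": "hf_example", "DATASET_ID": "alice/filebox-backup", "SYNC_INTERVAL": "3600"})` returns an empty list, while passing the full dataset URL instead of `username/dataset-name` is reported as a problem.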

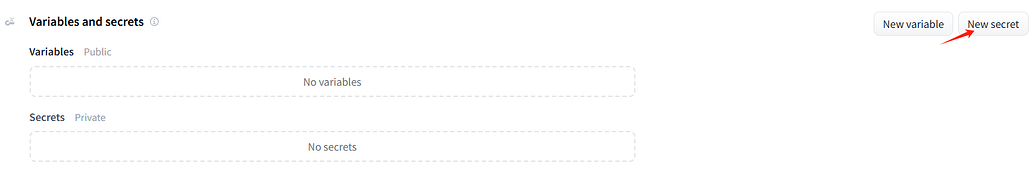

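Once this is done, you can change the password in the admin panel and attach external WebDAV storage. When the Space restarts, it pulls the latest backup from the dataset and restores it, so you do not need to worry about losing data.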

Admin panel path: /#/admin

Default admin password: FileCodeBox2023

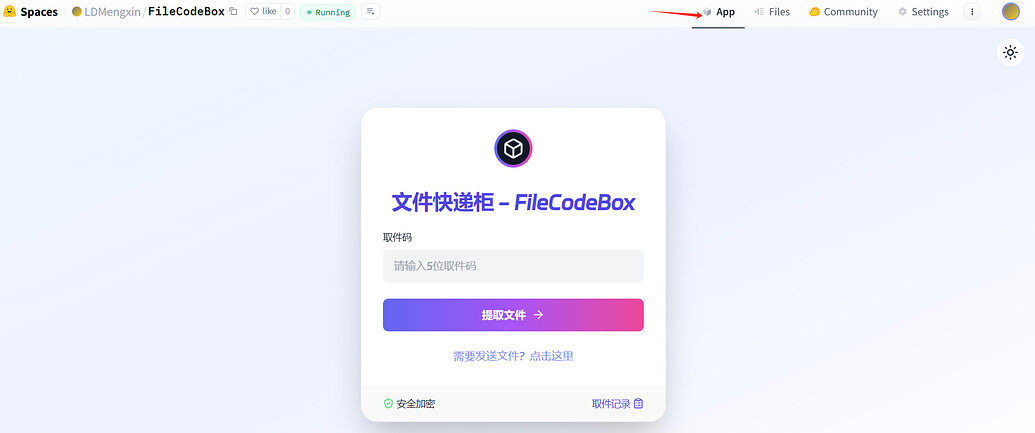

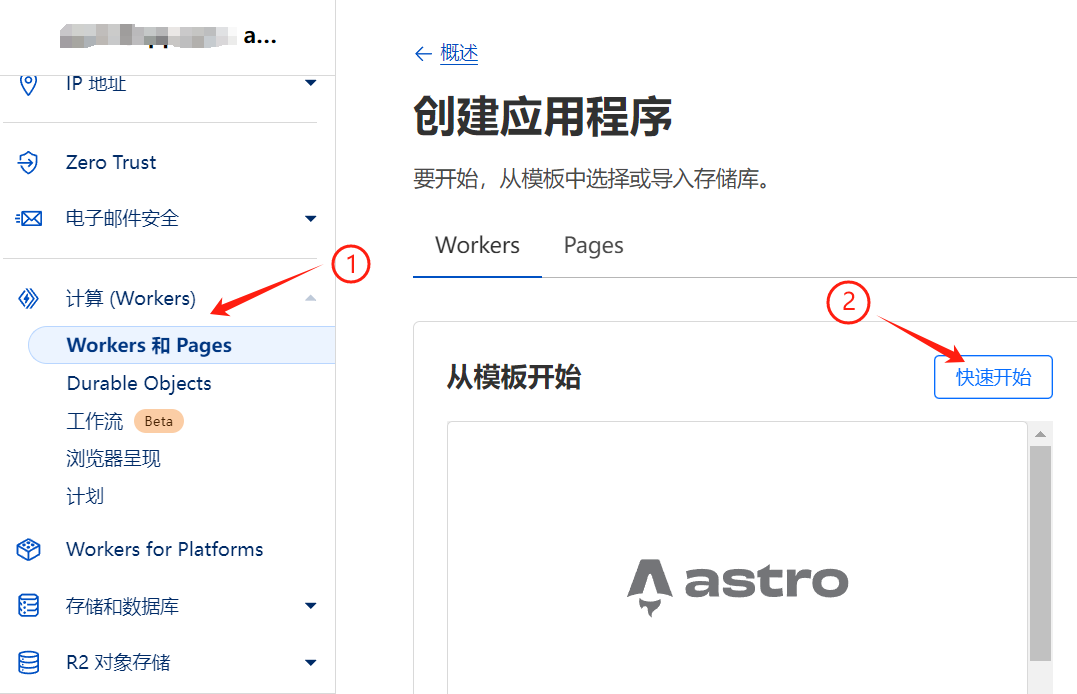

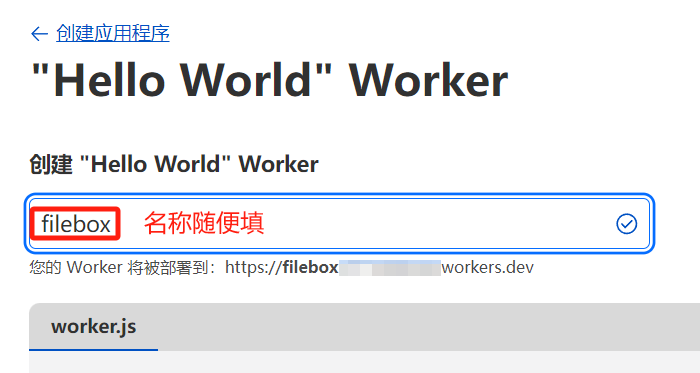

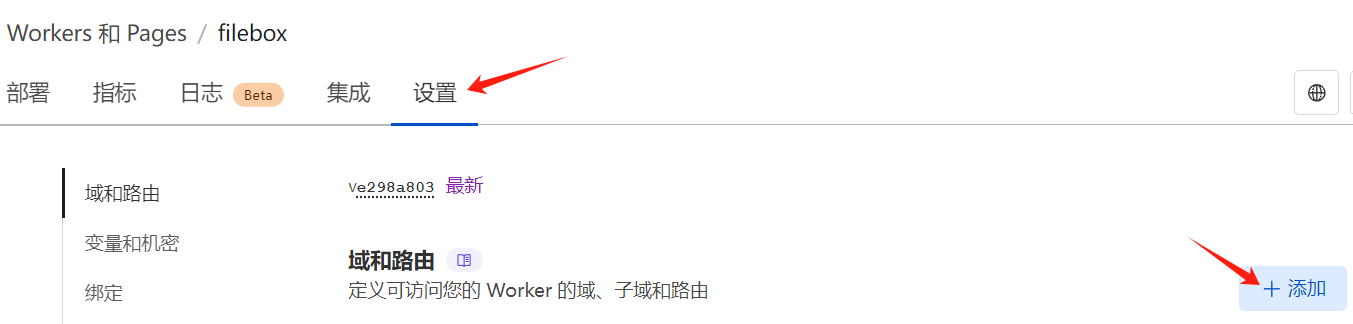

Set up a reverse proxy on Cloudflare

Then scroll to the bottom and click

Then click

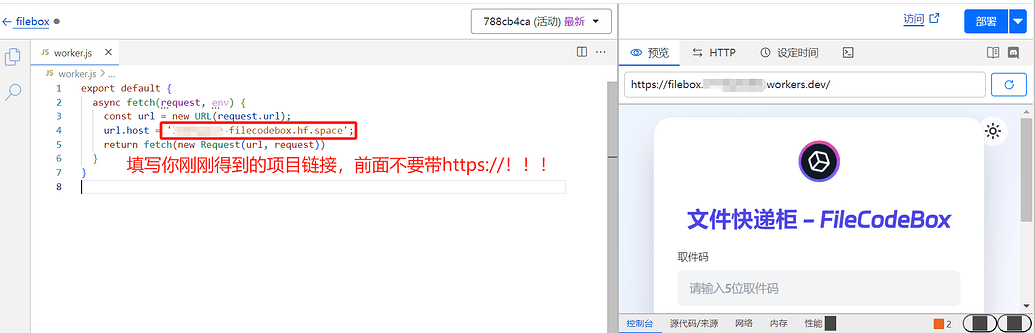

```javascript
export default {
  async fetch(request, env) {
    const url = new URL(request.url);
    url.host = 'your address'; // note: the address must not include https://
    return fetch(new Request(url, request));
  }
}
```
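The Worker changes only the host of the incoming URL and forwards everything else unchanged, which is why the address must be a bare host name with no `https://`. The Python sketch below mirrors that one step, using the standard library, to show what the rewritten URL looks like. The function name `rewrite_host` and the host names in the usage note are hypothetical, and this illustrates only the URL handling, not the Workers runtime.

```python
from urllib.parse import urlsplit, urlunsplit


def rewrite_host(request_url, upstream_host):
    """Swap only the host of request_url; scheme, path, query and fragment are kept."""
    if "://" in upstream_host:
        # Same rule as the comment in the Worker: no scheme in the address.
        raise ValueError("upstream_host must be a bare host name without a scheme")
    parts = urlsplit(request_url)
    return urlunsplit(parts._replace(netloc=upstream_host))
```

For instance, `rewrite_host("https://files.example.com/s/abc?x=1", "user-filebox.hf.space")` keeps the path and query and only replaces the host, so a visitor on your own domain reaches the same page on the Space.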

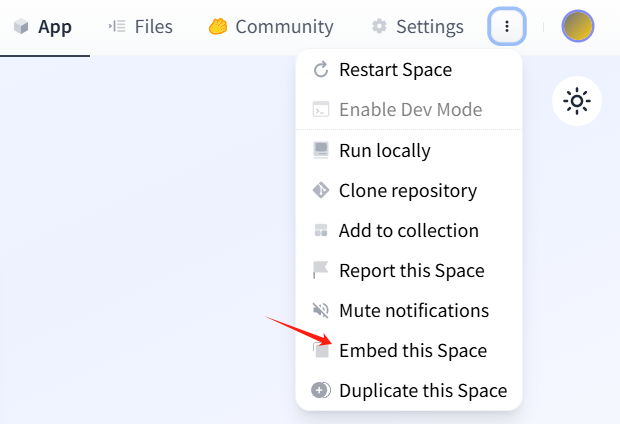

Find your project's address:

Then click the button on the right